Sick of the endless loop of writing, breaking, and then fixing fragile end-to-end tests? Agentic QA is a fresh take that uses AI agents to autonomously test your app. It moves beyond rigid scripts to focus on what the user actually wants to accomplish, freeing your team from the grind of test maintenance so you can ship features faster without skimping on quality.

Moving Beyond Brittle Tests with Agentic QA

For years, quality assurance has felt like being stuck on a hamster wheel. Teams painstakingly craft test scripts with frameworks like Cypress or Playwright, only to watch them crumble at the slightest UI tweak. A button ID gets updated, a CSS class is added, or a layout gets a minor shuffle, and suddenly your whole test suite is bleeding red. This turns what should be a safety net into a massive source of technical debt.

This constant upkeep is a huge drain, especially for agile teams and startups where moving fast is everything. Every hour spent digging through code to fix a broken selector is an hour you’re not spending on building your next big feature. It's an inefficient, frustrating process that feels like you're fighting your tools instead of using them.

The Old Way: A Rigid GPS

Think of traditional test automation like one of those old-school GPS devices from the early 2000s. You had to punch in a very precise, turn-by-turn route: "Drive 500 metres, turn left on Main Street, drive 200 metres, then turn right on Oak Avenue."

If you hit an unexpected road closure—or in our world, a new pop-up modal, a changed element ID, or a component that's slow to load—the whole thing just gives up. It's incapable of adapting. It only understands the exact sequence of commands it was fed, forcing you to pull over and manually reprogram the entire journey from scratch.

The Agentic QA Approach: An Experienced Driver

Now, picture an experienced human driver. You don't give them step-by-step directions. You just tell them the destination: "Take me to the city library." They understand the goal. Using their own intelligence, they navigate the city, intuitively finding detours around traffic jams or unexpected construction. That, right there, is the core idea of agentic QA.

You stop telling the machine how to do something and start telling it what you want to achieve. This fundamental shift from imperative commands to outcome-focused instructions is what agentic testing is all about.

This visual from e2eAgent.io really drives home the idea of intelligent navigation, where the focus is on the destination, not the specific path you take to get there.

The image perfectly captures an agent navigating a complex environment to reach a goal, much like an AI agent finds its way through a web application to complete a user journey.

So, How Does Agentic QA Actually Work?

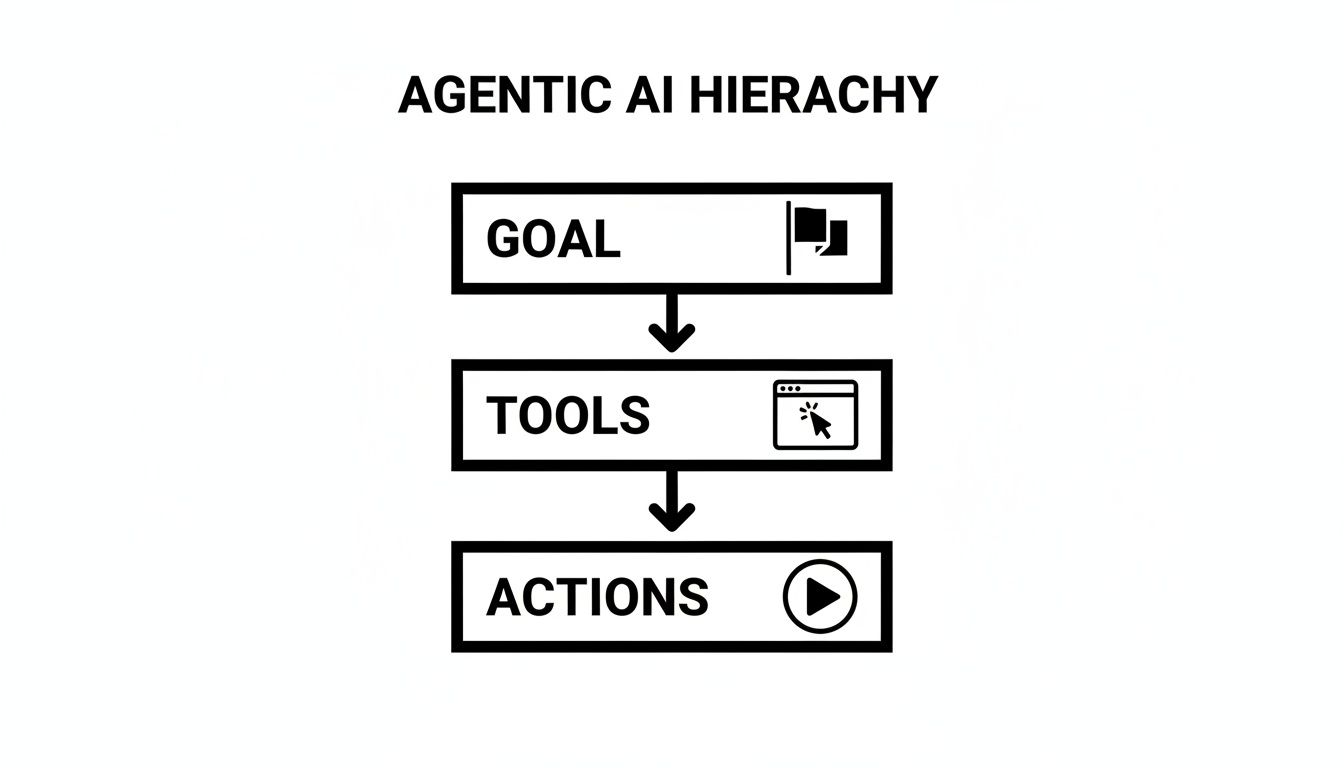

At its heart, agentic QA completely flips the script on how we think about test automation. Instead of writing rigid, step-by-step code, you simply give an AI model a high-level goal. You provide the tools, like a web browser, and then grant it the autonomy to figure out how to get the job done.

Think of it like this: the agent is the AI, the goal is the user journey you want to test, and the tool is the browser it uses to interact with your app. You just describe what a user needs to achieve in plain English.

The AI agent takes your instruction, looks at the current state of your application’s UI, and translates your goal into a sequence of actions. It then performs these actions—clicking buttons, filling out forms, and navigating between pages—just like a person would, all while observing the results to make sure each step was successful.
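To make that loop concrete, here is a minimal Python sketch of the observe, decide, act and verify cycle. Everything in it is hypothetical. `FakeApp` stands in for a real browser, and `choose_action` is a hard-coded lookup where a real agent would consult an AI model. It shows the control flow only. It is not how e2eAgent.io or any other product is implemented.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FakeApp:
    """Toy stand-in for a browser session: the current page plus a history of clicks."""

    page: str = "home"
    log: list[str] = field(default_factory=list)

    def visible_buttons(self) -> list[str]:
        # What the agent can "see" on each page of this toy app.
        screens = {
            "home": ["Sign up", "Log in"],
            "signup": ["Create account"],
            "dashboard": ["Log out"],
        }
        return screens.get(self.page, [])

    def click(self, label: str) -> None:
        # Navigation rules for the toy app; unknown clicks leave the page unchanged.
        transitions = {
            ("home", "Sign up"): "signup",
            ("signup", "Create account"): "dashboard",
            ("dashboard", "Log out"): "home",
        }
        self.page = transitions.get((self.page, label), self.page)
        self.log.append(label)


def choose_action(goal: str, buttons: list[str]) -> str | None:
    """Hypothetical decision step. A real agent would ask an AI model which visible
    control moves it toward the goal; here that judgement is a hard-coded lookup."""
    helpful = {"dashboard": ["Create account", "Sign up"]}
    for label in helpful.get(goal, []):
        if label in buttons:
            return label
    return None


def run_agent(app: FakeApp, goal: str, max_steps: int = 10) -> bool:
    """Observe, decide, act and verify until the goal page is reached or steps run out."""
    for _ in range(max_steps):
        if app.page == goal:  # verify
            return True
        buttons = app.visible_buttons()  # observe
        action = choose_action(goal, buttons)  # decide
        if action is None:
            return False  # nothing on screen helps: report a failure
        app.click(action)  # act
    return app.page == goal
```

Calling `run_agent(FakeApp(), "dashboard")` returns `True` after clicking "Sign up" and then "Create account". The step budget keeps a confused agent from wandering forever.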

The Shift from Code to Intent

The real breakthrough with agentic QA is its focus on intent rather than implementation. Traditional test automation forces you to get bogged down in the technical minutiae of the UI. You spend countless hours hunting for specific CSS selectors or XPath locators that are fragile and prone to breaking the moment a developer makes a small change.

With an agentic approach, you can step back and focus on what really matters: the user's objective. This fundamentally changes how you create and maintain tests.

You're no longer writing code that says, "Click the button with the ID `#submit-checkout-btn-v2`." Instead, you simply tell the agent, "Add the most expensive laptop to the cart and complete the purchase." The agent figures out the rest.

This shift means your team's most valuable skill is no longer their coding prowess, but their deep understanding of the product and its users. If you can clearly describe a user flow, you can automate a test for it.

Breaking Down the Agentic Process

Let's walk through a common scenario to see how this works in the real world. Imagine you want to test a new user registration flow.

Here’s how it would play out:

- Define the Goal: You start with a clear, natural language instruction: "Sign up for a new account using a unique email, then log out and confirm you can log back in with the new credentials."

- Autonomous Execution: The AI agent opens a browser, navigates to your app's sign-up page, and identifies the necessary input fields for name, email, and password. It fills them out intelligently and submits the form. Any CAPTCHA is normally disabled in the test environment, since CAPTCHAs exist precisely to block automated clients.

- Intelligent Verification: After submission, the agent observes the screen to verify success. It looks for confirmation messages, dashboard elements, or welcome pop-ups—the same visual cues a human tester would look for—to confirm the account was created.

- Multi-Step Reasoning: The agent then moves on to the next part of the goal. It finds the "Log Out" button, clicks it, and navigates back to the login page to test the new credentials. It confirms a successful login by looking for the expected landing page.
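Multi-step reasoning like this depends on carrying state from one step to the next. In this hypothetical sketch, each sub-goal of the registration journey is a function that acts on a toy in-memory app and returns whether its success check passed. The shared `ctx` dictionary carries the new credentials from the sign-up step through to the final login. `FakeAuthApp` and the step functions are illustrative names, not a real API.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FakeAuthApp:
    """Toy in-memory stand-in for the sign-up and login pages."""

    accounts: dict[str, str] = field(default_factory=dict)
    current_user: str | None = None

    def sign_up(self, email: str, password: str) -> None:
        self.accounts[email] = password
        self.current_user = email

    def log_out(self) -> None:
        self.current_user = None

    def log_in(self, email: str, password: str) -> None:
        if self.accounts.get(email) == password:
            self.current_user = email


# A sub-goal acts on the app using shared context and reports whether its check passed.
Step = Callable[[FakeAuthApp, dict], bool]


def sign_up(app: FakeAuthApp, ctx: dict) -> bool:
    app.sign_up(ctx["email"], ctx["password"])
    return app.current_user == ctx["email"]  # like spotting the welcome screen


def log_out(app: FakeAuthApp, ctx: dict) -> bool:
    app.log_out()
    return app.current_user is None  # like seeing the login page again


def log_back_in(app: FakeAuthApp, ctx: dict) -> bool:
    # Reuses the credentials created in the first sub-goal.
    app.log_in(ctx["email"], ctx["password"])
    return app.current_user == ctx["email"]


def run_journey(app: FakeAuthApp, steps: list[Step], ctx: dict) -> list[str]:
    """Run sub-goals in order and stop at the first failed check; returns the names that passed."""
    passed: list[str] = []
    for step in steps:
        if not step(app, ctx):
            break
        passed.append(step.__name__)
    return passed
```

Running `[sign_up, log_out, log_back_in]` against a fresh `FakeAuthApp` returns all three step names. Stopping at the first failed check keeps the failure report pointed at the step that actually broke.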

This ability to reason through multi-step journeys is what truly sets agentic QA apart. The agent doesn't just execute isolated commands; it maintains context and works towards a final objective. You can get a better feel for how this cognitive ability is reshaping the testing world in our deep-dive on AI in E2E testing.

The adoption of this technology is happening fast. Gartner forecasts that by the end of 2026, a staggering 40% of enterprise applications will use task-specific AI agents, a massive jump from less than 5% in 2025. For QA teams in Australia, this is a clear signal that intelligent automation is quickly becoming a business necessity, not just a nice-to-have.

Comparing Agentic QA to Traditional Frameworks

To really get a feel for what agentic QA brings to the table, it helps to put it side-by-side with the tools most of us are using today. Frameworks like Cypress and Playwright have been the go-to for end-to-end testing for years, but they’re built on a completely different philosophy. They're powerful, no doubt, but they also come with a set of trade-offs that are starting to feel like a real bottleneck for modern development teams.

At their core, traditional frameworks are all about code. To write a test, an engineer has to script out every single interaction, step by painstaking step. This script hunts for specific elements on a webpage using identifiers like CSS selectors or XPaths and then tells the browser exactly what to do with them.

This approach requires a specialised skillset, often turning test automation into a siloed job handled only by dedicated QA engineers or developers with the right know-how. The actual business goal—what a user is trying to do—gets lost in translation, turned into a list of brittle, technical commands that are hardwired to the application's code.

The Problem of Brittle Selectors

Here's where the old model really starts to show its cracks: it's incredibly fragile. Web apps are living things, constantly evolving. A front-end developer might make a seemingly small change, like updating a button's ID to match new coding standards, and suddenly dozens of tests shatter. The script looks for a selector that's no longer there, the test fails, and it has nothing to do with the actual feature being broken.
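Here is that failure mode in miniature. The example uses a hypothetical toy DOM in which a refactor renames `#submit-checkout-btn` to `#submit-checkout-btn-v2`. The id-based lookup breaks. A lookup keyed on what the user perceives, the element's role and visible text, still succeeds. The role-plus-text match is a deliberately simplified stand-in, similar in spirit to role-based locators such as Playwright's `getByRole`. Real agents reason over much richer signals, such as the rendered page.

```python
from __future__ import annotations

# A toy "DOM": each element has an id plus the role and text a user would perceive.
Element = dict[str, str]


def find_by_selector(dom: list[Element], element_id: str) -> Element | None:
    """Scripted approach: tied to an implementation detail (the exact id)."""
    return next((el for el in dom if el["id"] == element_id), None)


def find_by_intent(dom: list[Element], role: str, text_hint: str) -> Element | None:
    """Simplified intent match: right kind of control, with the hint in its visible text."""
    hint = text_hint.lower()
    return next(
        (el for el in dom if el["role"] == role and hint in el["text"].lower()),
        None,
    )


# Before and after a refactor that renames the id and tweaks the label.
before = [{"id": "submit-checkout-btn", "role": "button", "text": "Place order"}]
after = [{"id": "submit-checkout-btn-v2", "role": "button", "text": "Place order now"}]

print(find_by_selector(after, "submit-checkout-btn"))  # None: the scripted test fails
print(find_by_intent(after, "button", "place order")["id"])  # submit-checkout-btn-v2
```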

Teams often lump these false alarms in with test flakiness. Strictly speaking, a flaky test is one that passes or fails unpredictably on the same code, but neither kind of failure points to a real bug. Some studies suggest QA teams can spend over 30% of their time just fixing and maintaining broken tests. It's a frustrating, reactive cycle that kills momentum and makes everyone lose faith in the test suite.

The agentic approach is designed to sidestep this entire problem by focusing on the 'why' instead of the 'how'.

This hierarchy shows how the agent starts with the user’s goal. From there, it figures out the right tools and actions needed to get the job done on its own, making it resilient to all those superficial UI changes that would break a traditional script.

A Head-to-Head Comparison

Let's break down the key differences more directly. While traditional frameworks give you pinpoint control, agentic QA is all about resilience and making testing accessible to more people. This simple shift makes it a seriously compelling option for teams trying to move faster without cutting corners on quality.

Agentic QA vs Traditional E2E Frameworks (Cypress/Playwright)

Here’s a look at how the two approaches stack up in the areas that matter most.

| Attribute | Agentic QA | Traditional Frameworks (Cypress/Playwright) |
|---|---|---|
| Test Creation | Uses natural language. Describe the user journey in plain English. | Requires writing code (e.g., JavaScript/TypeScript) and using selectors. |
| Maintenance Effort | Very low. The AI agent adapts to most UI changes automatically. | High. Tests frequently break due to changes in element IDs or selectors. |
| Required Skills | Product knowledge and the ability to describe user flows. | Specialised coding skills and deep knowledge of the framework. |
| Test Flakiness | Minimal. Focuses on user intent, not brittle implementation details. | Common. Highly sensitive to UI code changes, leading to flaky tests. |
| Adaptability | High. Intelligently navigates the UI, even if layouts or elements change. | Low. Tightly coupled to the DOM structure; requires manual updates. |

The table really tells the story. The true cost of owning a traditional test suite is often much higher than you'd think, thanks to all the hidden maintenance work and the need for specialised skills.

By abstracting away the code, agentic QA democratises testing. It empowers product managers, manual testers, and business analysts to contribute directly to the automation suite, leveraging their deep user-centric knowledge.

This doesn't just widen the pool of people who can help; it also makes sure tests are directly tied to real business goals. For teams that feel like they're constantly playing catch-up, exploring AI-based test automation can be a pathway to a more sustainable and effective quality process.

Ultimately, it comes down to what your team needs. If you require absolute, granular control over every browser action, a traditional framework might still be the right choice. But if your goal is to build a robust, low-maintenance test suite that reflects how real users behave and can actually keep up with development, agentic QA offers a much smoother path forward.

Putting Agentic QA to the Test: Real-World Scenarios

Theory is great, but the real magic of agentic QA becomes clear when you see it tackle the messy, unpredictable tests that trip up traditional scripts. Let's move past the concepts and look at a few common scenarios where an AI agent can solve problems that would otherwise have engineers tearing their hair out.

The beauty is in the simplicity—the instructions are written in plain English, just like you'd ask a human colleague to test something.

This hands-on approach shows that agentic testing isn't some far-off idea; it's a practical tool that helps today's product teams move faster. The ability to turn a simple request into a series of complex, intelligent actions is what makes it so powerful.

Testing a Complex User Onboarding Flow

Onboarding is your first handshake with a new user. It's a critical journey, often packed with multiple steps, email verifications, and logic that changes based on user input. A traditional test script for this is usually a monster—long, complicated, and fragile.

Here’s how an agent handles it instead:

- The Prompt: "Go to the homepage and sign up for a new 'Pro' trial account using a brand new email. Go through all three steps of the onboarding tour. Once that's done, head to the settings page and check that the account plan is listed as 'Pro Trial'."

The AI agent gets the goal and just starts working. It finds the sign-up button, generates a unique email, and fills out the form. When the multi-step tour pops up, it knows to click "Next" or "Continue" until it's finished, without caring about the exact button text or where it is on the screen.

Finally, it locates the settings area, navigates there, and scans the page to confirm the "Pro Trial" text is present. It can even handle things like waiting for a confirmation email without you needing to code in explicit waitFor commands.
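Skipping explicit `waitFor` commands doesn't remove the wait. The agent simply owns it. One plausible shape is a poll with a deadline rather than a fixed sleep. This is a minimal sketch under that assumption. `wait_until` and the fake inbox are hypothetical, and a real agent would re-read the page or a test mailbox on each check.

```python
from __future__ import annotations

import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Re-check `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


def make_fake_inbox(arrives_on_check: int) -> Callable[[], bool]:
    """Hypothetical inbox whose confirmation email 'arrives' on the Nth check."""
    checks = 0

    def has_confirmation() -> bool:
        nonlocal checks
        checks += 1
        return checks >= arrives_on_check

    return has_confirmation


print(wait_until(make_fake_inbox(3), timeout=2.0, interval=0.01))  # True
```

Polling finishes as soon as the condition holds, so a fast environment doesn't pay for a worst-case sleep. The deadline turns "never arrived" into a clear failure instead of a hung run.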

Validating a Dynamic E-commerce Checkout

E-commerce checkouts are a minefield for old-school automation. You've got products going in and out of stock, promo codes, and shipping options that change on the fly. Scripting every possibility is a nightmare, but an agent navigates this chaos with ease.

- The Prompt: "Go to the electronics category, find the priciest wireless headphones you can, and add two pairs to the cart. Go to checkout, pop in the discount code 'SUMMER20', and make sure the total price has dropped. Finish the purchase with the test credit card details."

An agentic QA tool doesn't need a specific product ID. It understands concepts like "priciest" and can visually scan the page to find the right item, add it to the cart, and move on.

It then applies the coupon and, crucially, performs a logical check. It verifies the final price is lower than the subtotal, confirming the discount actually worked. This is far more robust than checking for a specific dollar amount, which could change tomorrow.
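Once prices have been read off the page, both judgements in this scenario become simple comparisons: picking the priciest item and confirming the total dropped. This minimal sketch assumes prices appear as display strings. The product names and amounts are made up. It compares the order total before and after the code is applied, following the prompt's "make sure the total price has dropped". That comparison stays valid even when shipping or tax is added on top of the subtotal.

```python
import re
from decimal import Decimal


def parse_price(text: str) -> Decimal:
    """Turn display text such as '$1,299.00' or 'AUD 349.95' into a Decimal."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if match is None:
        raise ValueError(f"no price found in {text!r}")
    return Decimal(match.group().replace(",", ""))


def priciest(products: dict[str, str]) -> str:
    """Name of the product with the highest displayed price."""
    return max(products, key=lambda name: parse_price(products[name]))


def discount_applied(total_before_code: str, total_after_code: str) -> bool:
    """Relative check: passes whenever the total drops, whatever the exact amounts."""
    return parse_price(total_after_code) < parse_price(total_before_code)


headphones = {"Basic Buds": "$79.00", "Studio Max": "$1,299.00", "Travel ANC": "$349.95"}
print(priciest(headphones))  # Studio Max
print(discount_applied("$2,598.00", "$2,078.40"))  # True
```

`Decimal` avoids the rounding surprises of binary floats when comparing currency amounts.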

Verifying a Multi-Step Data Submission Form

Forms with fields that appear and disappear, file uploads, and fiddly date pickers are another classic headache. An agent handles these dynamic elements just like a person would, which makes the tests more realistic and a whole lot more reliable.

- The Prompt: "Log in as a test user, go to the 'Submit Report' page, and fill out the form. Choose 'Financial' as the report type, which should make an 'End of Quarter' checkbox appear—make sure you tick it. Attach the 'report.pdf' file, pick next Tuesday from the calendar, and submit. Check that you see a success message."
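Relative dates in a prompt still need a precise reading. On a Monday, "next Tuesday" could mean tomorrow or eight days away, so any implementation has to pick a rule. This sketch assumes the first Tuesday strictly after today. The helper name is hypothetical. Writing the exact date into the prompt removes the ambiguity altogether.

```python
from datetime import date, timedelta

TUESDAY = 1  # date.weekday() counts Monday as 0 and Sunday as 6


def next_weekday(today: date, weekday: int = TUESDAY) -> date:
    """First matching weekday strictly after `today`; on a Tuesday this is a week later."""
    days_ahead = (weekday - today.weekday()) % 7
    return today + timedelta(days=days_ahead or 7)


print(next_weekday(date(2025, 6, 2)))  # a Monday, so prints 2025-06-03
```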

The agent understands the cause and effect here: it knows that selecting "Financial" makes the checkbox appear, so it actively looks for it. This kind of adaptability is becoming essential, especially as Australian enterprises embrace agentic AI at a stunning pace. Usage reportedly jumped 50% in just three months, between March and June 2025.

A recent survey revealed that nearly one in five Australians (18%) have already used agentic AI. This trend signals a huge demand for smarter testing solutions that can think more like a human. You can dig into the full findings on Australia's rapid AI uptake in this Adobe report.

By focusing on these real-world user goals, agentic QA helps teams build a test suite that is both resilient and genuinely meaningful, ensuring the app works exactly as customers expect it to.

How to Integrate Agentic QA into Your CI/CD Pipeline

Having a suite of smart, low-maintenance tests is a massive win, but you only unlock their true power when they run automatically as part of your development workflow. Plugging agentic QA into your Continuous Integration and Continuous Deployment (CI/CD) pipeline creates a powerful, automated safety net that catches regressions before they ever see the light of day.

The whole point is to create a rapid feedback loop. Every time a developer pushes new code, the agentic test suite should kick off automatically, giving clear, immediate feedback on whether the changes broke something. This frees up your team to ship new features with confidence, knowing a reliable guardian is watching over the core user experience.

And here’s the good news: unlike traditional frameworks that often demand complicated setup scripts, Docker containers, and a headache of dependency management, integrating agentic QA is usually much simpler. Most modern agentic platforms are built with an API-first mindset, which makes integration a surprisingly straightforward process.

Triggering Tests on Every Commit

The most common and effective place to hook this in is at the pull request (or merge request) stage. By adding a simple job to your CI/CD configuration file, you can trigger your entire test suite to run against the proposed changes.

This is often done with a single command-line interface (CLI) call or a simple API request. For instance, in a workflow file for GitHub Actions or GitLab CI, you'd add steps along these lines:

- Checkout Code: The first step is always to pull the latest version of the code from the feature branch.

- Install Dependencies: A quick command to install any necessary packages for your CI runner.

- Run Agentic Tests: A single command, such as `e2eagent run-all-tests --api-key $E2EAGENT_API_KEY`, is all it takes to kick off the entire suite.
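In GitHub Actions and GitLab CI, a step passes or fails according to the exit code of the command it runs. Some teams wrap the command in a script so the job fails early, with a clear message, when the secret is missing. A minimal Python sketch of such a wrapper could look like this. It uses only the command quoted in the step above. `build_command` and `run_suite` are hypothetical names, and the CLI's exit-code behaviour is an assumption to confirm against the tool's own documentation.

```python
import os
import subprocess
from collections.abc import Mapping


def build_command(env: Mapping[str, str]) -> list[str]:
    """Assemble the CLI call, failing fast if the secret was never injected."""
    api_key = env.get("E2EAGENT_API_KEY")
    if not api_key:
        raise SystemExit("E2EAGENT_API_KEY is not set; add it to your CI secrets")
    return ["e2eagent", "run-all-tests", "--api-key", api_key]


def run_suite(env: Mapping[str, str] = os.environ) -> int:
    """Run the suite and hand its exit code back to the CI runner."""
    # Assumption: the CLI exits non-zero when any test fails, which is the signal
    # CI runners use to mark the step, and the pull request check, as failed.
    return subprocess.run(build_command(env)).returncode
```

A CI step would then call `sys.exit(run_suite())` so the runner sees the same exit code the CLI returned.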

The agentic platform then handles all the heavy lifting in the cloud, spinning up browsers and running the tests based on your plain-English instructions.

The key difference is simplicity. You're not managing browser drivers or wrestling with complex test environments inside your CI runner. You're simply telling the agentic platform, "go," and it takes care of the rest.

This lean approach keeps your CI/CD pipelines fast and clean. It cuts down on the potential points of failure and makes the whole process easier for any developer on the team to understand and troubleshoot. As you look at modernising your stack, you can explore how different AI testing tools handle CI/CD integration.

Reporting Results and Notifying Your Team

Once the tests finish, the final piece of the puzzle is getting the results back to your team where they’ll actually see them. A good integration makes this totally seamless.

- Status Checks: The agentic QA platform reports a pass or fail status directly back to the pull request in GitHub or GitLab. This gives you that immediate, at-a-glance visual cue on the build's health.

- Detailed Reports: A link to a full test report is usually posted as a comment, letting developers click through to see exactly what failed, often complete with screenshots or videos of the test run.

- Slack/Teams Notifications: Using webhooks, you can set up instant notifications to a dedicated channel. This makes sure the whole team knows about a build failure the moment it happens, so you can jump on it straight away.
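If your team wires up notifications itself, a Slack incoming webhook only needs an HTTPS POST with a JSON body, and a `text` field is the simplest accepted payload. This minimal sketch uses only the standard library and covers Slack only. The branch, counts and report URL are hypothetical inputs your pipeline would supply. The webhook URL is a secret you create in Slack.

```python
import json
import urllib.request


def build_slack_message(branch: str, passed: int, failed: int, report_url: str) -> dict[str, str]:
    """One-line summary for a Slack incoming webhook."""
    status = "passed" if failed == 0 else "FAILED"
    return {
        "text": f"E2E suite {status} on {branch}: {passed} passed, {failed} failed. Report: {report_url}"
    }


def post_to_slack(webhook_url: str, payload: dict[str, str]) -> int:
    """POST the JSON payload to the webhook URL and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

Keeping message building separate from sending makes the wording easy to test without touching the network.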

By embedding agentic QA directly into your CI/CD pipeline, you shift testing from something you do occasionally to a continuous, automated habit. This tight integration helps your team move faster, merge code with far more confidence, and ultimately, ship a much higher-quality product to your users.

Writing Effective Prompts for Agentic QA

Moving to agentic QA doesn't mean you stop thinking about test design; it just changes how you do it. While you're no longer writing lines of code, the quality of your instructions—your prompts—is everything. It’s what makes the difference between a reliable test and a frustrating failure.

Think of the AI agent as a new team member who's brilliant but takes everything you say completely literally. You need to communicate with absolute clarity, turning your high-level goals into a series of unambiguous, step-by-step actions. The agent can't guess what you mean or infer your hidden assumptions. Every critical step, every piece of data, and every expected outcome needs to be spelled out. This approach is powerful because it allows anyone, from manual testers to product managers, to contribute to a test suite that's robust and easy to maintain.

This skill is becoming more crucial every day. The agentic AI market is set to explode, growing from USD 7.06 billion in 2025 to a staggering USD 93.20 billion by 2032. Here in Australia, AI adoption has already jumped by 282%, putting pressure on lean teams to find smarter ways to test. Learning to write a great prompt is the key to unlocking the efficiency of this technology. You can read more about this massive market shift and what it means for Australian SaaS companies.

From Vague Ideas to Specific Instructions

The biggest trap people fall into is writing prompts that are too vague. An instruction like "test the login" leaves far too much to chance. What user should it use? What does a successful login even look like?

Let's look at a quick comparison to see what works and what doesn't.

- Bad Prompt: "Test the checkout process."

- Good Prompt: "Add the 'Pro Subscription' to the cart, go to the checkout page, and then complete the purchase using the test credit card details provided."

See the difference? The second prompt provides a clear, sequential user journey that leaves no room for misinterpretation.

Key Principles for Powerful Prompts

To get consistent, reliable results from your agentic QA platform, keep three core principles in mind. They’ll help you build tests that are both effective and easy for anyone to understand.

- Be Specific About User Intent: Don't just say what to do; say how to do it and with what information. Instead of "log in," your prompt should specify the exact username and password.

- Define Clear Success Criteria: How does the agent know a step worked? Tell it what to look for. This could be a "Success!" message popping up, a specific heading on the next page, or seeing a new item appear in a list.

- Break Down Complex Journeys: If you're testing a long or complicated user flow, don't try to cram it all into one sentence. Break the journey down into logical, sequential steps. This not only helps the agent process the test correctly, but it also makes it much easier for your team to troubleshoot if something goes wrong.
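One way to hold a team to these principles is to keep prompts as structured data and render them to plain English. This is a minimal sketch. `AgentPrompt` and its fields are hypothetical and not part of any agentic QA tool's API. The `problems` check flags the two gaps that most often produce vague prompts: no explicit steps and no success criteria.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPrompt:
    """Hypothetical structure for keeping agent prompts specific and checkable."""

    goal: str
    steps: list[str]
    success_criteria: list[str]
    test_data: dict[str, str] = field(default_factory=dict)

    def problems(self) -> list[str]:
        """Flag gaps that leave the agent guessing."""
        issues = []
        if not self.steps:
            issues.append("no steps: break the journey into sequential actions")
        if not self.success_criteria:
            issues.append("no success criteria: say what the agent should see")
        return issues

    def render(self) -> str:
        """Plain-English text to paste into an agentic QA tool."""
        lines = [f"Goal: {self.goal}"]
        lines += [f"{n}. {step}" for n, step in enumerate(self.steps, start=1)]
        if self.test_data:
            lines.append("Use this data: " + ", ".join(f"{k} = {v}" for k, v in self.test_data.items()))
        lines += [f"Check: {criterion}" for criterion in self.success_criteria]
        return "\n".join(lines)


prompt = AgentPrompt(
    goal="Buy a Pro Subscription",
    steps=[
        "Add the 'Pro Subscription' to the cart",
        "Go to the checkout page",
        "Complete the purchase using the test credit card details provided",
    ],
    success_criteria=["An order confirmation message is shown"],
)
print(prompt.problems())  # []
print(prompt.render())
```

A bare "Test the checkout process" would fail both checks, which is exactly the vague prompt the comparison earlier warns against.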

A good rule of thumb: write a prompt so clear that a human tester who has never seen your application before could follow it perfectly. If it passes that test, the AI agent will have no trouble executing it.

By getting these simple principles right, you can translate your product knowledge directly into a powerful and resilient test suite that actually keeps up with your development pace.

Got Questions About Agentic QA?

Any time you introduce a new way of working, questions are bound to pop up. Moving on from tools you know inside and out always feels like a bit of a leap. But once you get a handle on what this new agent-driven approach is all about, you'll see a clear path forward for your entire quality process.

Let's dig into some of the most common questions we hear from teams making the switch.

Will This Actually Replace Our Cypress Tests?

For many test suites, the short answer is yes, and the replacement is often far more resilient. Traditional tests are notoriously brittle; they rely on fixed selectors, and the moment a developer changes a button's ID or class, the test breaks. It’s a constant maintenance headache.

Agentic QA is different because it’s built for change. The AI agent doesn't just look for a specific selector; it understands the intent behind the test step. If your test says, "Click the login button," the agent can still find it even if the button's text, colour, or underlying code changes. This simple shift in thinking dramatically cuts down on the flaky tests that so often clog up CI pipelines.

A smart way to start is to augment your existing suite. Use agentic tests for new features first, then circle back and slowly replace your most fragile legacy tests.

This isn't just about replacing old tests—it's about upgrading your team's entire approach to quality. You start focusing on user outcomes, not implementation details. This means fewer false alarms from minor front-end tweaks and more time spent on what matters.

How Does It Handle Complex Scenarios?

This is where agentic QA really shines. Modern applications are rarely simple, and agentic platforms are designed for that real-world messiness.

Need to test a sign-up form with dynamic data? No problem. You can just tell the agent something like, "generate a random email address and use it to complete the signup form."
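Behind the scenes, "a random email address" could mean something as simple as the helper below. This is a minimal sketch, and the function name and defaults are hypothetical. `example.com` is reserved for documentation and never receives mail. If the flow sends a verification email, point `domain` at a test mailbox you control. Many mail providers support the `+tag` form, which delivers every `qa+anything@` address to a single inbox.

```python
import uuid


def unique_test_email(prefix: str = "qa", domain: str = "example.com") -> str:
    """Address that won't collide with earlier runs, shaped like 'qa+<8 hex chars>@example.com'."""
    return f"{prefix}+{uuid.uuid4().hex[:8]}@{domain}"


print(unique_test_email())  # different on every call
```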

And what about those tricky interactions, like third-party logins? The agent can be guided through an entire OAuth flow, like a 'Login with Google' button. It handles pop-ups, redirects, and multi-step authentications just like a person would—a massive advantage over older frameworks that often stumble on cross-origin interactions.

What Skills Does My Team Need to Get Started?

This is probably the biggest win for most teams. You don't need a squad of highly specialised automation engineers who live and breathe code. The most important skill is a deep understanding of your own product and how your users interact with it.

If you can describe a user journey in plain English, you can write a powerful, reliable automated test. This opens up the world of test automation to everyone on the team—product managers, manual QAs, even folks from customer support can jump in and contribute. This democratisation of testing naturally leads to better test coverage and, ultimately, a much higher-quality product for your customers.

Stop wasting time fixing broken Cypress and Playwright scripts. With e2eAgent.io, you just describe your test in English, and our AI agent handles the rest. See how you can build a resilient, low-maintenance test suite by visiting https://e2eagent.io.