End-to-end testing is all about checking an application’s entire workflow from start to finish, exactly how a real person would use it. It makes sure every piece of the puzzle, from the frontend interface you see to the backend databases and APIs you don't, works together as intended. Think of it as the ultimate quality check before your software goes live.

What Is End-to-End Testing and Why It Matters

Picture a customer on your e-commerce site. They’re not thinking about your databases, microservices, or APIs. All they care about is whether they can find a product, pop it in their cart, pay for it, and get that confirmation email. End-to-end testing, or E2E testing for short, is the only way to mimic that complete user journey and confirm the whole system is in sync.

It helps to think of it like building a car. Other types of testing look at the individual parts:

- Unit tests are like checking if a single spark plug fires correctly.

- Integration tests check if the fuel pump and the engine work together.

- End-to-end testing is getting in the driver's seat, turning the key, and taking the car for a spin around the block. It’s the only way to know for sure that the entire vehicle works as one cohesive unit.

The Last Line of Defence

E2E testing is your final, most important quality gate before you deploy. While other tests are brilliant for catching bugs early on at the component level, they often work in isolation. A subtle bug in one microservice might only surface when it gets a specific piece of data from another—a scenario only an E2E test can reliably catch.

Ultimately, these tests answer the one question every business needs to ask: Does our application actually work for our users?

End-to-end testing gives managers a clear picture of how every part of the application interacts in the real world. This insight is gold when it comes to prioritising fixes and features that will have the biggest impact on user experience.

This big-picture view is priceless. It snags those critical bugs that slip past other tests, protecting your brand’s reputation and preventing lost revenue from something like a broken checkout flow. Without it, you’re basically shipping code with your fingers crossed, just hoping all the individual bits and pieces play nicely together in production.

How It Fits into the Bigger Picture

To be clear, E2E testing doesn't replace other testing methods; it completes them. It sits right at the top of the "testing pyramid," which means you’ll have fewer, more powerful E2E tests compared to the hundreds or thousands of unit tests at the bottom.

To help put this in context, here’s a quick breakdown of how end-to-end testing stacks up against other common types.

End-to-End Testing vs Other Test Types

| Testing Type | Scope | Primary Goal | Best For |
|---|---|---|---|
| Unit Testing | A single function or component | Verify individual pieces of code work correctly in isolation. | Catching bugs early and locally at the smallest level. |
| Integration Testing | Two or more connected components | Ensure different modules or services can communicate with each other. | Finding issues at the seams where different parts of the system meet. |
| End-to-End Testing | The entire application workflow | Validate the full user journey across all integrated systems. | Simulating real-world user scenarios to confirm the whole system delivers value. |
| Functional Testing | Specific features or functions | Confirm that a feature meets its specified business requirements. | Verifying what the system does, feature by feature. |

The goal of E2E testing isn't to cover every tiny edge case. Instead, it’s about validating your most critical user workflows. This is a key difference from other validation methods like functional testing. If you want to dive deeper into how these testing types fit together, you can learn more about functional testing in our detailed guide.

By taking this strategic approach, you gain the most confidence with the least amount of effort, making end-to-end testing a non-negotiable part of any modern software development process.

Integrating E2E Testing into Your Development Cycle

To really get the most out of end-to-end testing, it can't be an afterthought—something you run whenever you remember. The real value comes from weaving these tests right into your development cycle. They become an automated quality gate, a final line of defence that protects your users from nasty bugs.

Think of your development pipeline like a series of quality checkpoints. Unit and integration tests are your early-stage checks, making sure individual components and their immediate connections are solid. E2E tests, on the other hand, act as the final, full-system inspection before you ship. This makes them a perfect fit for a staging or pre-production environment, once all the smaller-scale tests have passed.

Finding the Right Cadence

Trying to run your entire suite of E2E tests on every single code commit would be painfully slow and impractical. The trick is to strike a sensible balance between comprehensive coverage and getting fast feedback to your developers. This is where a tiered approach really shines.

First up, you’ll want to build a small, fast-running suite of smoke tests. These are designed to cover the absolute, non-negotiable user journeys in your application.

- User Registration and Login: Can a new user sign up and actually get into their account?

- Core Feature Usage: Does the main thing your app is supposed to do still work?

- Checkout or Purchase Flow: Can a customer give you their money and complete a transaction?

This essential set of tests can run much more often, say, on every merge to your main development branch. It gives you a quick thumbs-up or thumbs-down if a critical workflow has just been broken. Then, you can schedule your full, more exhaustive end-to-end testing suite to run nightly or as a mandatory step just before a production deployment.
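
To make the tiered cadence concrete, here is a minimal sketch of how a pipeline might pick which tests to run for a given trigger. The test names, the `Trigger` values, and the `select_tests` helper are all hypothetical and only illustrate the idea; your CI tool will have its own way of tagging and filtering tests.

```python
from enum import Enum


class Trigger(Enum):
    MERGE_TO_MAIN = "merge_to_main"
    NIGHTLY = "nightly"
    PRE_DEPLOY = "pre_deploy"


# Hypothetical test names, tagged by tier. "smoke" marks the critical journeys.
TESTS = {
    "signup_and_login": "smoke",
    "core_feature": "smoke",
    "checkout": "smoke",
    "profile_settings": "full",
    "order_history": "full",
}


def select_tests(trigger: Trigger) -> list[str]:
    """Return the names of the tests to run for a given pipeline trigger."""
    if trigger is Trigger.MERGE_TO_MAIN:
        # Fast feedback: only the smoke tier runs on every merge.
        return [name for name, tier in TESTS.items() if tier == "smoke"]
    # Nightly and pre-deploy runs execute the whole suite.
    return list(TESTS)
```

Keeping the tier as data next to each test name means that promoting a test into the smoke suite is a one-word change rather than a pipeline rewrite.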

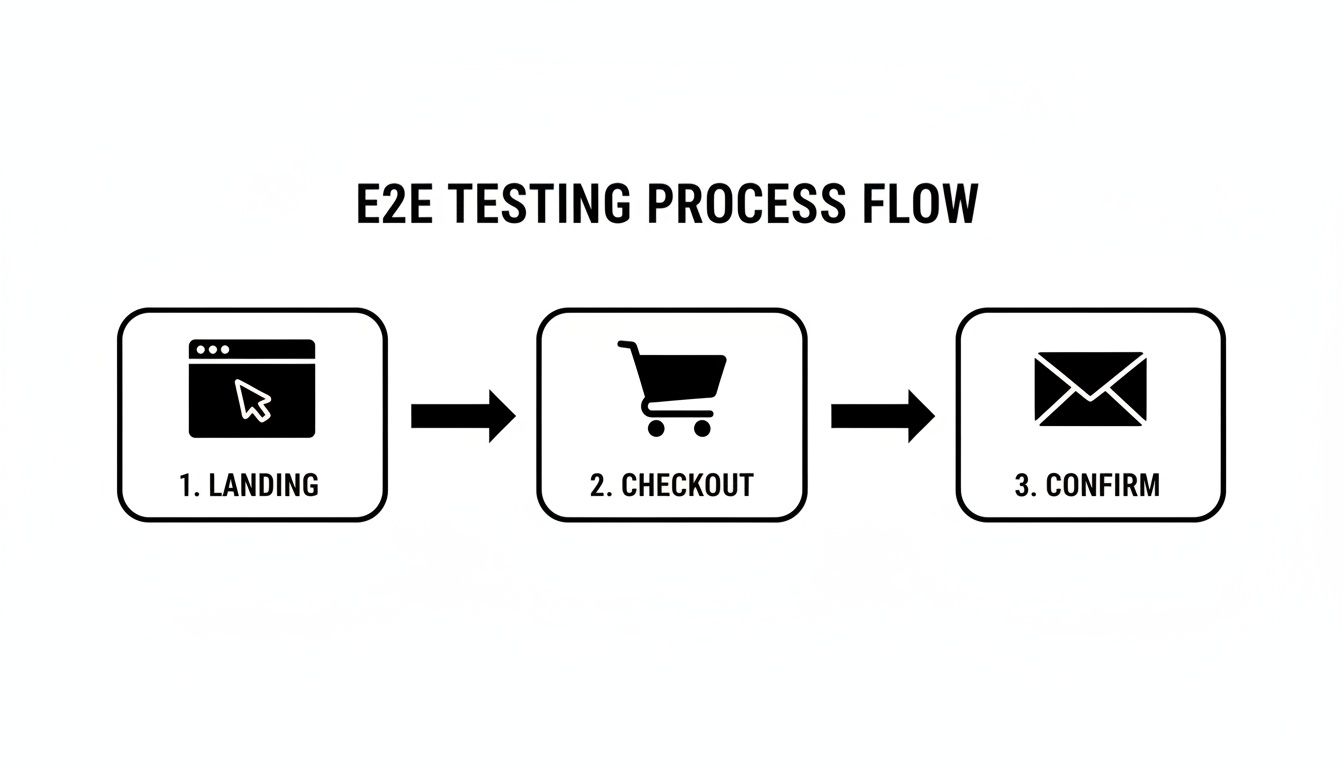

The diagram shows a classic high-value user flow that would be a prime candidate for a smoke test, tracking the journey from the landing page all the way to a final purchase confirmation.

This kind of process is the lifeblood of an e-commerce site. A failure at any point in this journey directly translates to lost revenue and a frustrated customer.

Automating Go/No-Go Decisions

The real power of integrated E2E testing kicks in when you link the test results directly to your deployment workflow. For DevOps and engineering leads, this means setting up your Continuous Integration/Continuous Deployment (CI/CD) pipeline to make automated go/no-go decisions. If the critical smoke tests fail, the deployment is automatically stopped in its tracks.

By making a successful end-to-end test run a prerequisite for deployment, you build a powerful safety net. It makes it far harder for new features, no matter how small, to silently break the core functionality your business depends on.
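
As a rough illustration of that go/no-go decision, the sketch below turns a list of test results into a single yes or no. The `E2EResult` class and `deployment_allowed` function are hypothetical names, and the policy is an assumption for this example: only smoke tests block a deployment, and a run with no smoke results at all is treated as a "no-go".

```python
from dataclasses import dataclass


@dataclass
class E2EResult:
    name: str
    passed: bool
    smoke: bool  # True if the test covers a critical user journey


def deployment_allowed(results: list[E2EResult]) -> bool:
    """Go/no-go: block the deploy if any smoke test failed or none ran."""
    smoke_results = [r for r in results if r.smoke]
    if not smoke_results:
        # No evidence is treated as a "no-go" rather than a silent pass.
        return False
    return all(r.passed for r in smoke_results)
```

In a CI job, a check like this would typically end with `sys.exit(0 if deployment_allowed(results) else 1)`, so that a failing gate stops the pipeline through its exit code.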

This level of automation is becoming less of a "nice-to-have" and more of a necessity as development speed continues to increase. The demand for robust testing is exploding. In fact, Australia's software testing services market is projected to grow by USD 1.7 billion between 2024 and 2029. This growth highlights the pain teams feel with old-school testing bottlenecks that kill momentum, pushing them towards more efficient, automated solutions. You can dive deeper into this by exploring the full software testing market research.

By thoughtfully embedding end-to-end testing into your development cycle, you shift from a reactive "bug hunt" to a proactive quality assurance mindset. It ensures that every release is a confident step forward, not a risky gamble. For more ideas on levelling up your development and testing processes, check out other articles on the e2eAgent.io blog.

Why Traditional End-to-End Tests Are So Brittle

While end-to-end testing gives you the ultimate confidence that your app works, the reality for most teams is often one of frustration. The single biggest complaint you'll hear is that E2E tests are notoriously brittle. They seem to break at the slightest provocation, which kicks off a relentless cycle of bug-fixing and maintenance that can swallow up valuable engineering time.

This brittleness is what leads to "flaky" tests—the kind that pass one minute and fail the next, even when there's no actual bug. Think of it like a self-driving car trying to navigate a city where the street signs and road markings change their appearance every single day. The car's core navigation logic is perfectly fine, but because it relies on rigid visual cues, it gets stuck and fails its journey. This is exactly what happens with traditional end-to-end testing.

The Fragility of UI Selectors

So, what's at the heart of this problem? It nearly always comes down to UI selectors. Traditional test scripts are hard-coded to find specific things on a page using identifiers like IDs, CSS classes, or XPath. For example, a script might be told to "find the button with the ID 'submit-btn' and click it."

This works beautifully… until a developer makes a minor, seemingly harmless change. Maybe they refactor the code and the ID changes to 'checkout-submit-btn', or a CSS class gets an update as part of a design refresh. The button still does the exact same thing, but because its identifier has changed, the test breaks. The user's journey isn't broken at all, but the test script can no longer find its next step, so it fails.
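
Here is a small, self-contained sketch of that failure mode. It uses Python's standard `html.parser` in place of a real browser, and the two HTML snippets are made up for illustration. The lookup by ID succeeds before the refactor and fails after it, even though the button's visible label never changed.

```python
from html.parser import HTMLParser


class IdCollector(HTMLParser):
    """Collects the id attribute of every element in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.ids: set[str] = set()

    def handle_starttag(self, tag, attrs):
        for key, value in attrs:
            if key == "id" and value:
                self.ids.add(value)


def has_element_with_id(html: str, element_id: str) -> bool:
    """Return True if any element in `html` carries the given id."""
    parser = IdCollector()
    parser.feed(html)
    return element_id in parser.ids


# Same button, same label; only the id changed during a refactor.
BEFORE = '<form><button id="submit-btn">Place order</button></form>'
AFTER = '<form><button id="checkout-submit-btn">Place order</button></form>'

print(has_element_with_id(BEFORE, "submit-btn"))  # True
print(has_element_with_id(AFTER, "submit-btn"))   # False
```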

This creates a massive maintenance headache. It's not uncommon for engineering teams to spend 20-30% of their sprints just hunting down and fixing broken selectors in their test suite, instead of building new features.

Common Causes of Test Brittleness

This constant, unpredictable breaking is usually caused by a few common culprits that can derail development momentum.

- UI and Code Refactors: Even small updates to the HTML structure or CSS styling can invalidate the very specific paths that tests use to find elements.

- Timing and Synchronisation Issues: Modern web apps are dynamic. Content might load in piece by piece, an animation can delay a button's appearance, or network lag can vary. If a test script looks for an element before it has fully loaded on the screen, it will fail due to a simple timing mismatch, not a real bug.

- Dynamic Content: Apps that pull data from databases or APIs can show different content every time the page loads. A test expecting to find the text "Product A" might fail if the database now returns "Product B" as the first item in a list.
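
The timing problem in particular is usually tackled by polling for a condition instead of checking once or sleeping for a fixed time. The helper below is a minimal sketch of that idea; `wait_until` is a hypothetical name, and many browser automation tools ship their own waiting mechanisms that you would normally use instead.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0,
               interval: float = 0.1) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Simulate an element that only "appears" 0.2 seconds from now.
appears_at = time.monotonic() + 0.2
found = wait_until(lambda: time.monotonic() >= appears_at,
                   timeout=2.0, interval=0.05)
print(found)  # True
```

The test now waits only as long as it needs to, and a genuine failure still surfaces once the timeout runs out.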

This constant fragility undermines the entire point of testing. When teams can no longer trust their test results, they start ignoring failures, which is how real bugs slip through into production.

The pressure to release features quickly just makes this worse. For teams pushing out updates every day, traditional end-to-end testing can become a major bottleneck, with failure rates sometimes hitting 30-50% in highly dynamic applications. This pain point is driving a major shift in the industry towards AI-powered solutions. Small teams can now automate more reliably without needing deep coding expertise, with industry trends showing potential cost reductions of up to 40%. You can learn more about the growing AI-enabled testing market in Australia and its local impact.

Beyond Flakiness: Other Hidden Costs

While brittle selectors are the main issue, other challenges also make traditional E2E testing a costly exercise for agile teams.

Slow execution speed is a big one. Simulating a full user journey inside a real browser is naturally much slower than running isolated unit tests. A large suite of E2E tests can take hours to finish, delaying feedback for developers and slowing down the whole deployment pipeline.

On top of that, just setting up and maintaining a stable test environment is a complex job. It requires you to replicate the production environment as closely as possible, including all its databases, third-party APIs, and microservices. Any small inconsistency between the test and production environments can lead to tests that pass locally but fail in the real world, further chipping away at your team's trust in the process.

Practical Examples of Critical E2E Test Scenarios

Theory is great, but let's get practical. Seeing end-to-end testing in action is where its value really clicks. The real power of E2E testing shines when you apply it to the critical user journeys that your business relies on.

Think of these scenarios less like code and more like plain-English stories. Each one follows a user’s path from start to finish, touching multiple parts of your application along the way. Here are a few common examples that are absolutely vital for almost any modern web application.

E-commerce Customer Purchase Journey

For any online store, the checkout flow is the absolute king. A bug here isn't just a minor hiccup; it's a direct hit to your bottom line. An E2E test for this journey makes sure every single step, from browsing to buying, works flawlessly.

This one test has to validate a whole stack of systems working together: the frontend UI, shopping cart logic, inventory management, discount engine, payment gateway integration, and even the final order confirmation system.

A typical test would walk through these steps:

- Navigate to a product page. The test checks that the product details, images, and price all show up as expected.

- Add multiple items to the cart. It then confirms the cart icon updates correctly and the items are listed in the cart summary.

- Apply a discount code. The test enters a valid code and verifies that the subtotal and final price recalculate properly.

- Proceed to checkout. It then moves to the checkout page and fills in the shipping and payment details.

- Complete the purchase. Finally, the test simulates the final click, makes sure the payment is processed, and confirms the user lands on the "Thank You" or order confirmation page.
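
To show the shape of such a test without a browser, the sketch below walks the same steps against an in-memory stand-in. Everything in it is an assumption made for illustration: the `FakeStore` class, the product names and prices, and the `SAVE10` discount code. A real E2E test would drive the actual UI and a payment sandbox, but the sequence of actions and checks would look similar.

```python
from decimal import Decimal


class FakeStore:
    """In-memory stand-in for the real shop; every name here is hypothetical."""

    PRICES = {"widget": Decimal("20.00"), "gadget": Decimal("35.00")}
    DISCOUNTS = {"SAVE10": Decimal("0.10")}  # code -> fraction off

    def __init__(self) -> None:
        self.cart: list[str] = []
        self.discount = Decimal("0")
        self.order_confirmed = False

    def add_to_cart(self, sku: str) -> None:
        self.cart.append(sku)

    def apply_discount(self, code: str) -> None:
        self.discount = self.DISCOUNTS[code]

    def total(self) -> Decimal:
        subtotal = sum((self.PRICES[sku] for sku in self.cart), Decimal("0"))
        return (subtotal * (1 - self.discount)).quantize(Decimal("0.01"))

    def checkout(self, card_number: str) -> None:
        # A real test would go through the payment gateway's sandbox here.
        self.order_confirmed = bool(self.cart) and bool(card_number)


def run_purchase_journey(store: FakeStore) -> None:
    """Walk the purchase journey, checking the outcome of each step."""
    store.add_to_cart("widget")
    store.add_to_cart("gadget")
    assert len(store.cart) == 2                # cart lists both items
    assert store.total() == Decimal("55.00")   # price before discount
    store.apply_discount("SAVE10")
    assert store.total() == Decimal("49.50")   # total recalculated
    store.checkout(card_number="TEST-CARD")
    assert store.order_confirmed               # user reaches confirmation


run_purchase_journey(FakeStore())
```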

SaaS Product New User Sign Up

If you're running a Software-as-a-Service (SaaS) business, getting new users in the door is everything. If a potential customer can’t create an account and log in, your product might as well not exist for them. This E2E test validates the entire onboarding funnel, from registration and email verification right through to that first successful login.

This single test scenario checks the crucial handshake between your web app, your user database, and your third-party email service. A failure in any one of these components would be caught instantly.

The journey for this critical scenario breaks down into these steps:

- Land on the sign-up page. The test kicks off right where your user would, on the registration form.

- Fill out and submit the form. It enters a unique email, a strong password, and any other required details before hitting submit.

- Check for a verification email. This is the tricky part—the test needs a way to peek into an inbox, find the automated welcome email, and grab the verification link.

- Complete the verification. It then "clicks" that link to activate the new account.

- Log in with the new credentials. To finish, the test goes back to the login page, enters the new user's details, and confirms it can successfully get to the application dashboard.
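
The email step is the one that trips most people up, so here is a minimal sketch of one piece of it: pulling the verification link out of an email body once the test has fetched it. The email text, the `app.example.test` host, and the `token` query parameter are invented for this example, and how you fetch the message depends on whichever test inbox service you use.

```python
import re
from urllib.parse import parse_qs, urlparse

# Matches http(s) URLs up to the next whitespace or angle bracket.
LINK_PATTERN = re.compile(r"https?://[^\s<>]+")


def extract_verification_link(email_body: str, expected_host: str) -> str:
    """Return the first link in the email that points at `expected_host`."""
    for url in LINK_PATTERN.findall(email_body):
        if urlparse(url).hostname == expected_host:
            return url
    raise ValueError("no verification link found in email body")


body = """Welcome aboard!
Confirm your address: https://app.example.test/verify?token=abc123
If you did not sign up, ignore this message."""

link = extract_verification_link(body, "app.example.test")
token = parse_qs(urlparse(link).query)["token"][0]
print(link)   # https://app.example.test/verify?token=abc123
print(token)  # abc123
```

Checking the host before following the link keeps the test from wandering off to an unsubscribe or help-centre URL that happens to appear first in the message.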

User Password Reset Flow

Forgetting a password shouldn't be a dead end for a user. A broken password reset flow is a surefire way to lock people out of their accounts for good, leading to major frustration and customer churn. This E2E test ensures users can securely and easily get back into their accounts.

This workflow is mission-critical for user retention and security. It tests the user lookup feature, secure token generation, email delivery, and the final password update mechanism all in one go.

An automated test would run through these actions:

- Navigate to the login page and click "Forgot Password".

- Enter a registered user's email address to get the process started.

- Verify receipt of the password reset email. Just like the sign-up test, it needs to check an inbox for the email.

- Follow the reset link from the email, which should lead to the password reset form.

- Enter and confirm a new password and submit the form.

- Attempt to log in using the brand-new password to confirm the whole process worked.

The Future Is AI-Powered End-to-End Testing

For any fast-moving product team, the endless cycle of fixing brittle, selector-based tests is a familiar headache. This isn't just a minor annoyance; the maintenance burden actively slows down development and gets in the way of shipping features quickly. But we're seeing a fundamental shift in how testing is done, moving away from rigid, coded instructions and towards intelligent, goal-oriented automation.

The new approach is all about using AI to completely rethink how we create and run our end-to-end tests. Instead of telling a script exactly how to find and click a button using a specific ID or CSS class, you simply describe what a user is trying to achieve in plain English. This is the simple but powerful idea behind AI-driven testing agents.

From Brittle Scripts to Intelligent Agents

Let's say you want to test your checkout flow. The old way involved writing a script that looked something like this: "Find the element with ID 'product-add-to-cart-button' and click it." The new way is far more intuitive. It’s simply: "Add the 'Premium Widget' to the cart."

An AI agent takes this natural language instruction and figures out how to navigate the application to get the job done, much like a human tester would. It understands the context of the page, identifies the right elements based on their meaning and purpose, and carries out the necessary steps.

This screenshot from e2eAgent.io shows exactly what this looks like in practice. On the left, you've got a plain-English instruction, and on the right, you can see the AI agent executing it in a real browser.

The real magic here is the separation of intent from implementation. The user's goal—"add item to cart"—doesn't change, even if the underlying code or UI design does. This makes the test incredibly resilient.

This simple change gets right to the heart of what makes tests flaky. When a developer refactors the UI and changes a button's ID, a traditional script breaks instantly. An AI agent, however, just adapts. It can still recognise the button's purpose through other clues like its text, its position on the page, or its role in the form, and completes the action without a fuss.
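
To give a feel for why matching on purpose survives an ID change, here is a deliberately simple toy. It is not how e2eAgent.io or any real AI agent works; it just scores clickable elements by how many words of the stated intent appear in their visible text. The `Element` class, `find_by_intent` function, and both page snapshots are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Element:
    tag: str
    element_id: str
    text: str


def find_by_intent(elements: list[Element], intent: str) -> Optional[Element]:
    """Pick the clickable element whose visible text best overlaps the intent."""
    wanted = set(intent.lower().split())
    best, best_score = None, 0
    for el in elements:
        if el.tag not in {"button", "a"}:
            continue  # only consider clickable elements
        score = len(wanted & set(el.text.lower().split()))
        if score > best_score:
            best, best_score = el, score
    return best


# The same page before and after a refactor that renamed the button's id.
PAGE_V1 = [
    Element("a", "nav-cart", "View cart"),
    Element("button", "product-add-to-cart-button", "Add to cart"),
]
PAGE_V2 = [
    Element("a", "nav-cart", "View cart"),
    Element("button", "atc-btn-v2", "Add to cart"),
]

print(find_by_intent(PAGE_V1, "add to cart").element_id)  # product-add-to-cart-button
print(find_by_intent(PAGE_V2, "add to cart").element_id)  # atc-btn-v2
```

The instruction stays the same across both versions of the page, which is the separation of intent from implementation in miniature.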

This adaptive capability is the defining feature of AI-powered testing. It transforms test maintenance from a constant, reactive chore into a rare, exception-based task.

Democratising Test Automation

Another huge win for this approach is how accessible it makes automation. You no longer need to be an expert in a specific coding language or testing framework to create robust automated tests. Now, anyone on the team, from product managers to manual QA testers, can help build out the automation suite just by describing user journeys in simple terms.

This opens up some powerful new possibilities:

- Faster Test Creation: Writing a few lines of English is far faster than coding a complex test script. This means teams can build comprehensive test coverage in a fraction of the time it used to take.

- Reduced Maintenance Overhead: Because the tests aren't tied to fragile UI selectors, they break far less often. This frees up your engineers to focus on building the actual product instead of fixing tests.

- Empowering Small Teams: Startups and small engineering teams can now achieve a level of automation that was previously only possible for large companies with dedicated QA engineering departments.

This technological shift is happening at a time of significant market growth. In Australia, the software testing services market is forecast to grow by USD 1.698 billion between 2024 and 2029. This boom is being driven by end-user demands that require better, more reliable automation. For small Aussie SaaS firms, where engineering teams of 5-10 people often lose 20-30% of their sprints to fixing flaky tests, AI agents are a genuine game-changer. You can dig into the numbers in this comprehensive market analysis.

By moving beyond code-based instructions, AI isn't just improving end-to-end testing; it's making it more resilient, accessible, and truly aligned with the pace of modern software development. Platforms that embrace this philosophy, like e2eAgent.io, where you can turn English descriptions into executed tests, are leading the charge in helping teams ship with greater speed and confidence.

Got Questions About End-to-End Testing? We’ve Got Answers.

Even after you get the hang of the basic principles, you’ll inevitably run into practical questions when it’s time to actually implement end-to-end (E2E) testing. This section tackles some of the most common queries we hear from developers, QA pros, and product managers. Our goal is to give you clear, direct answers to help you build a smarter testing strategy.

How Many End-to-End Tests Should We Really Have?

Look, there’s no single magic number here. Instead of chasing a specific count, it’s much smarter to follow the classic "Test Pyramid" model. Think of it this way: you want a wide base of fast, simple unit tests, a smaller middle layer of integration tests, and just a select few, high-impact E2E tests sitting right at the top.

Your focus for E2E testing should be squarely on your most critical business workflows. These are the "happy paths" that absolutely must work for your application to deliver any value.

Ask yourself, what are the user journeys that make or break our business?

- Can a new user sign up and log in without a hitch?

- Is a customer able to complete a purchase from start to finish?

- Does our product's core, signature feature actually work as promised?

A great starting point is to aim for 10-20 robust E2E tests that cover these vital flows. Honestly, it's far better to have a small, stable suite of tests that everyone trusts than hundreds of flaky ones the team just ends up ignoring. Prioritise stability and genuine value over sheer quantity.

What's the Real Difference Between E2E and Integration Testing?

This is a classic point of confusion, but the distinction is crucial for getting it right. Integration testing is all about making sure different modules or services within your application can talk to each other correctly. For instance, it checks if your user service can properly pull data from the customer database when the API asks for it. It’s about ensuring the internal plumbing is connected and working.

End-to-end testing, on the other hand, has a much wider view. It validates an entire user workflow from their perspective, simulating a real journey through the user interface (UI) and across all the different systems involved.

Here’s a simple analogy: Integration testing is like checking that all the pipes in your house are connected properly. End-to-end testing is turning on the kitchen tap to make sure water flows all the way from the main line, through the pipes, and out into the sink. It proves the whole system delivers the final result.

So, while integration tests check if the internal bits can communicate, E2E tests confirm that the entire system works together to deliver a complete, successful user experience.

Can We Just Use End-to-End Tests and Ditch the Rest?

In a word: no. While E2E tests are incredibly powerful, they are also the slowest, most resource-heavy, and most fragile type of test you'll ever write and maintain. Trying to use them to catch every little bug would be wildly inefficient and create a massive bottleneck in your development pipeline.

A balanced and effective testing strategy uses each test type for what it does best.

- Unit Tests are brilliant for checking isolated bits of logic inside a single function or component. They’re fast, stable, and pinpoint the exact location of a bug in the code.

- Integration Tests are perfect for making sure different microservices or internal modules can exchange data and work together as designed.

- End-to-End Tests act as the final quality checkpoint, confirming that complete, business-critical user journeys function correctly in an environment that mimics production.

Together, these layers form a solid safety net. Each type of test covers a different kind of risk, and if you remove one, you’re leaving significant gaps in your quality assurance.

How on Earth Do I Handle Test Data for E2E Tests?

You’ve hit on one of the biggest challenges in creating reliable end-to-end testing suites. The whole point is to make sure every single test starts from a clean, predictable, and isolated state. If your tests depend on data that can be changed by other tests or outside factors, they will become flaky and completely untrustworthy.

The best practice is to manage your test data programmatically for every single test run.

This usually breaks down into two key steps:

- Seeding Data: Before a test kicks off, use an API or a script to create the specific data it needs. For example, a "user login" test should start by programmatically creating a brand-new test user in the database just for that run.

- Tearing Down Data: After the test is done (whether it passes or fails), the data it created should be wiped clean. This leaves the environment spotless for the next test.

Whatever you do, avoid relying on a persistent, shared test database where data from old runs just sits there. That’s a recipe for disaster, leading to bizarre failures when, for instance, a test tries to register a username that was already created an hour ago. Using libraries to generate fake but realistic data (like names and email addresses) for each test is also a huge help in making sure your tests are independent and repeatable every time.
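
The seed and teardown steps can be sketched as a context manager that creates a unique user before the test and removes it afterwards, whether the test passes or fails. This is only an outline under stated assumptions: `FAKE_DB` stands in for your real database or API, and `temporary_user` is a hypothetical helper name.

```python
import uuid
from contextlib import contextmanager
from typing import Iterator

# Stand-in for the real user store; a real suite would call an API or database.
FAKE_DB: dict[str, dict] = {}


@contextmanager
def temporary_user() -> Iterator[dict]:
    """Seed a unique user before the test and remove it afterwards."""
    email = f"e2e-{uuid.uuid4().hex}@example.test"
    user = {"email": email, "password": uuid.uuid4().hex}
    FAKE_DB[email] = user          # seed
    try:
        yield user
    finally:
        FAKE_DB.pop(email, None)   # tear down, even if the test failed


with temporary_user() as user:
    assert user["email"] in FAKE_DB   # data exists during the test
assert not FAKE_DB                    # and is gone afterwards
```

Because each email address is generated fresh, two runs can never collide on the same username, and the `finally` block keeps a failed test from leaving stale data behind.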

Ready to stop wasting time on flaky tests? With e2eAgent.io, you can ditch the endless maintenance of fragile test scripts. Just describe your user journeys in plain English, and let our AI agent handle the rest. Build resilient E2E tests in minutes at e2eAgent.io and get back to shipping features your users love.